Like it or not, we live in an age of “1’s and 0’s,” and digitization has made documents easier to create and easier and cheaper to store. I remember, in the paper days, trying to find space for filing cabinets and paper files. We can now fit what used to fill thousands of filing cabinets on a single drive small enough to carry in a briefcase.

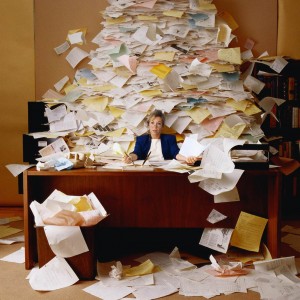

What this new technology has done is create an environment in which we produce more “documents” than ever before, because it seems easy:

We send an email to 10 people; 4 reply to us; we forward the replies to 8 people; and 6 of the original recipients send a copy of the email to 8 other people.

We have now created what would have been 54 “documents” in the days of paper. We never would have done that then, because sending all those copies and responses would have been inefficient and expensive.

This ease of document creation within corporations has caused enormous problems when lawsuits arise and “documents” and data must be reviewed and produced to other parties. For many years we have struggled to find the most efficient, cost-effective ways to cull through, review and produce these huge document collections.

A still-popular method is to use keyword searches to isolate responsive documents. This process searches the documents for certain words and phrases and isolates those containing the keywords. From that point, human review is used to isolate and extract the documents over which privilege is asserted. There are obvious problems with this method, chief among them manpower: the army required to review millions of documents can be overwhelming, and the use of that human army sharply increases the review error rate.

But there are less obvious, more subtle problems with this approach as well. A keyword misspelled in a document can cause that document to be missed entirely unless wildcards or alternative spellings are included in the keyword list. Alternative references are also a problem when the producing party refers to a project or thing by more than one name. For example, a search for a project called “Mustang” will miss relevant documents where the project is referred to by an internal nickname, such as “the horse.”
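To make the misspelling and nickname problem concrete, here is a minimal Python sketch of a keyword search that supports `*` and `?` wildcards and multi-word phrases. The function names (`matches`, `responsive`) and the sample documents are hypothetical, and real review platforms have their own search syntax; the point is only that the keyword list, not the software, decides what gets found.

```python
import fnmatch
import re


def tokenize(text):
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def matches(text, terms):
    """Return the search terms that hit this document.

    Single-word terms may use the wildcards * and ?, checked against every
    token with fnmatch; terms containing a space are treated as exact phrases.
    """
    tokens = tokenize(text)
    joined = " ".join(tokens)
    hits = set()
    for term in terms:
        term = term.lower()
        if " " in term:
            # Phrase search on word boundaries, e.g. "the horse".
            if re.search(r"\b" + re.escape(term) + r"\b", joined):
                hits.add(term)
        elif any(fnmatch.fnmatchcase(tok, term) for tok in tokens):
            hits.add(term)
    return hits


def responsive(docs, terms):
    """IDs of documents that hit at least one search term."""
    return sorted(doc_id for doc_id, text in docs.items() if matches(text, terms))


# Hypothetical collection: one clean hit, one misspelling, one nickname.
docs = {
    "doc1": "Mustang launch slipped to Q3.",
    "doc2": "The Mustnag budget was approved.",             # misspelled
    "doc3": "Status update on the horse: testing is done.",  # internal nickname
}

print(responsive(docs, ["mustang"]))                # ['doc1']
print(responsive(docs, ["must*"]))                  # ['doc1', 'doc2']
print(responsive(docs, ["must*", "the horse"]))     # ['doc1', 'doc2', 'doc3']
```

Each added term catches more documents, but a wildcard like `must*` would also pull in every document containing the ordinary word “must,” which is one reason agreeing on the keyword list matters.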

The courts and defendants have been thirsting for an economical and easy method for collecting, culling and producing documents. Enter “predictive coding.” With predictive coding, humans code one or several samples of the document collection into the software, and while that coding is being entered, the software evaluates the human coding decisions against the documents themselves. The theory is that the software learns the types of information being sought and can then locate the documents that best fit the desired collection.
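The learning step can be illustrated with a toy classifier. Commercial predictive coding products use their own, often proprietary, algorithms; the sketch below swaps in a simple multinomial naive Bayes model as a stand-in, assuming each human coding decision is a yes/no relevance call on a sample document. The class name `NaiveBayesCoder` and the seed documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesCoder:
    """Toy multinomial naive Bayes model trained on human-coded documents."""

    def fit(self, coded_docs):
        # coded_docs: (text, is_relevant) pairs from attorney review.
        self.word_counts = {True: Counter(), False: Counter()}
        self.doc_counts = Counter()
        for text, label in coded_docs:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))
        self.vocab = set(self.word_counts[True]) | set(self.word_counts[False])
        return self

    def _log_score(self, tokens, label):
        # log P(label) plus log P(word | label) for each known word,
        # using add-one (Laplace) smoothing for words unseen in this class.
        total = sum(self.word_counts[label].values())
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for tok in tokens:
            if tok in self.vocab:
                score += math.log(
                    (self.word_counts[label][tok] + 1) / (total + len(self.vocab))
                )
        return score

    def relevance(self, text):
        """Estimated probability that an unreviewed document is relevant."""
        tokens = tokenize(text)
        rel = self._log_score(tokens, True)
        non = self._log_score(tokens, False)
        # Normalize the two log scores into a probability without overflow.
        top = max(rel, non)
        return math.exp(rel - top) / (math.exp(rel - top) + math.exp(non - top))


# Hypothetical sample coded by reviewers (True = relevant).
seed_set = [
    ("Mustang launch schedule and budget", True),
    ("Mustang prototype test results", True),
    ("Holiday party menu", False),
    ("Parking garage closed Friday", False),
]
unreviewed = {
    "doc_a": "Revised budget for the Mustang launch",
    "doc_b": "Friday holiday party moved to the garage",
}

coder = NaiveBayesCoder().fit(seed_set)
ranked = sorted(unreviewed, key=lambda d: coder.relevance(unreviewed[d]), reverse=True)
for doc_id in ranked:
    print(doc_id, round(coder.relevance(unreviewed[doc_id]), 2))
```

One common pattern is to repeat this loop: reviewers code the highest-ranked documents, their decisions are added to the training set, and the model is refit. Note that this sketch needs both relevant and non-relevant examples in the sample, since a class with no coded documents has a prior of zero.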

Does predictive coding work?

The short answer is, it depends.

Predictive coding seems to rely on two central tenets. The first is that the sample batches chosen to begin training the software accurately reflect a cross section of the overall document universe. The second is sound human coding: if those samples do, in fact, represent a true and accurate cross section, the next step is to have attorneys review and manually code them in the software, allowing it to learn as it progresses. Statistical samples are then drawn at various stages to determine what proportion of the total collection is relevant to the discovery requests.
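The sampling step can be sketched as a simple random sample with a confidence interval. This is one common statistical approach, not necessarily what any particular vendor or court-approved protocol uses; the function name `estimate_prevalence`, the z value of 1.96 (about 95% confidence) and the simulated collection are assumptions for illustration.

```python
import math
import random


def estimate_prevalence(doc_ids, is_relevant, sample_size, z=1.96, rng_seed=None):
    """Estimate the share of relevant documents from a simple random sample.

    is_relevant stands in for a human reviewer coding each sampled document.
    Returns (estimate, lower, upper) using the normal approximation to the
    binomial; z=1.96 gives roughly 95% confidence.
    """
    rng = random.Random(rng_seed)
    sample = rng.sample(doc_ids, sample_size)
    hits = sum(1 for doc_id in sample if is_relevant(doc_id))
    p = hits / sample_size
    margin = z * math.sqrt(p * (1 - p) / sample_size)
    return p, max(0.0, p - margin), min(1.0, p + margin)


# Simulated collection of 100,000 documents in which every tenth is relevant.
collection = list(range(100_000))
p, low, high = estimate_prevalence(collection, lambda d: d % 10 == 0, 1_500, rng_seed=42)
print(f"Estimated relevant: {p:.1%} (95% CI {low:.1%} to {high:.1%})")
print(f"Roughly {p * len(collection):,.0f} relevant documents in the collection")
```

The normal approximation ignores the finite-population correction and becomes unreliable when very few sampled documents are relevant, so the interval here is a rough guide rather than a precise figure.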

The predictive coding process may be a very useful and economical tool, but it requires full participation by all parties. In fact, the optimal way to employ the process would be for all the parties to agree on a third-party vendor who could oversee and perform every aspect of the discovery coding. This alleviates potential problems of subjectivity in the document collection and in the coding process itself. As I understand it, this is the procedure being used in the In Re: Actos multidistrict litigation.

In very large document collections, running a keyword search before the predictive coding process may be helpful. Here again, though, a third party may be better placed to gather and distill the keywords and phrases to be used and to obtain the parties’ agreement on them.

If we are to meet both the defendants’ primary goal (collecting, culling and reviewing documents at the lowest possible cost) and the plaintiffs’ goal (receiving the most relevant and complete production of data), these tools may be invaluable, predictive coding in particular.

What seems to be going wrong in a great many cases is a failure of the parties to cooperate. In some cases, parties proceed with one technique or another without any agreement at all. In others, not all parties participate in, and agree on, the entire process and the methods to be used.

At the end of the day, it is about cooperation, agreement and the efficient use of technology.
