NTSB Chairman Voices Life-or-Death Concerns About Autonomous Vehicles

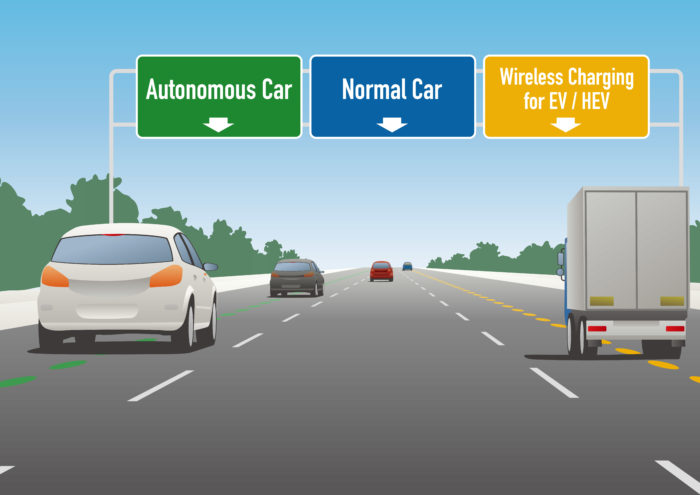

By 2020, self-driving cars could be roaming the nation's highways and byways in large numbers. Automotive-industry experts, along with executives at technology companies like Google and Uber, predict that the year will usher in the decade of autonomous vehicles.

Dozens of companies, including most major Detroit automakers, are in a road race to bring their versions of auto-piloted cockpits to market. Others already have prototypes on the pavement: Audi, Daimler, Honda and Tesla, to name a few. Even Apple is rumored to be developing a self-driving vehicle, though the company has yet to confirm details.

For all the high-tech hype surrounding this futuristic inevitability, uncertainty about safety remains. How will autonomous vehicles react in an accident? What will they do if a collision is about to occur? Who will program the software that makes such decisions?

An article in MIT Technology Review titled "Top Safety Official Doesn't Trust Automakers to Teach Ethics to Self-Driving Cars" addresses this serious subject. In it, National Transportation Safety Board Chairman Christopher Hart says federal regulations must be part of the solution, as must certain fail-safes similar to those in passenger aircraft.

“That to me is going to take a federal government response to address,” Hart says.

The agency has been discussing the regulation of autonomous vehicles at a logistical level since January 2016 but has yet to delve into the life-or-death scenarios its chairman describes.

“The rapid development of emerging automation technologies means that partially and fully automated vehicles are nearing the point at which widespread deployment is feasible,” reads an agency policy statement. “Essential to the safe deployment of such vehicles is a rigorous testing regime that provides sufficient data to determine safety performance and help policymakers at all levels make informed decisions about deployment.”

Ryan Calo, an assistant law professor at the University of Washington in Seattle, remains skeptical about anyone’s ability to program ethics into autonomous vehicles.

“If it encounters a shopping cart and a stroller at the same time, it won’t be able to make a moral decision that groceries are less important than people,” Calo says. “But what if it’s saving tens of thousands of lives overall because it’s safer than people?”

Patrick Lin, a philosophy professor at California Polytechnic State University in San Luis Obispo who was also interviewed by MIT Technology Review, says that while artificial intelligence can only go so far, an autonomous vehicle making a moral decision is not out of the question.

"It's better if we proactively try to anticipate and manage the problem before we actually get there," Lin says. "This is the kind of thing that's going to make for a lawsuit that could destroy a company or leave a huge black mark on the industry."
